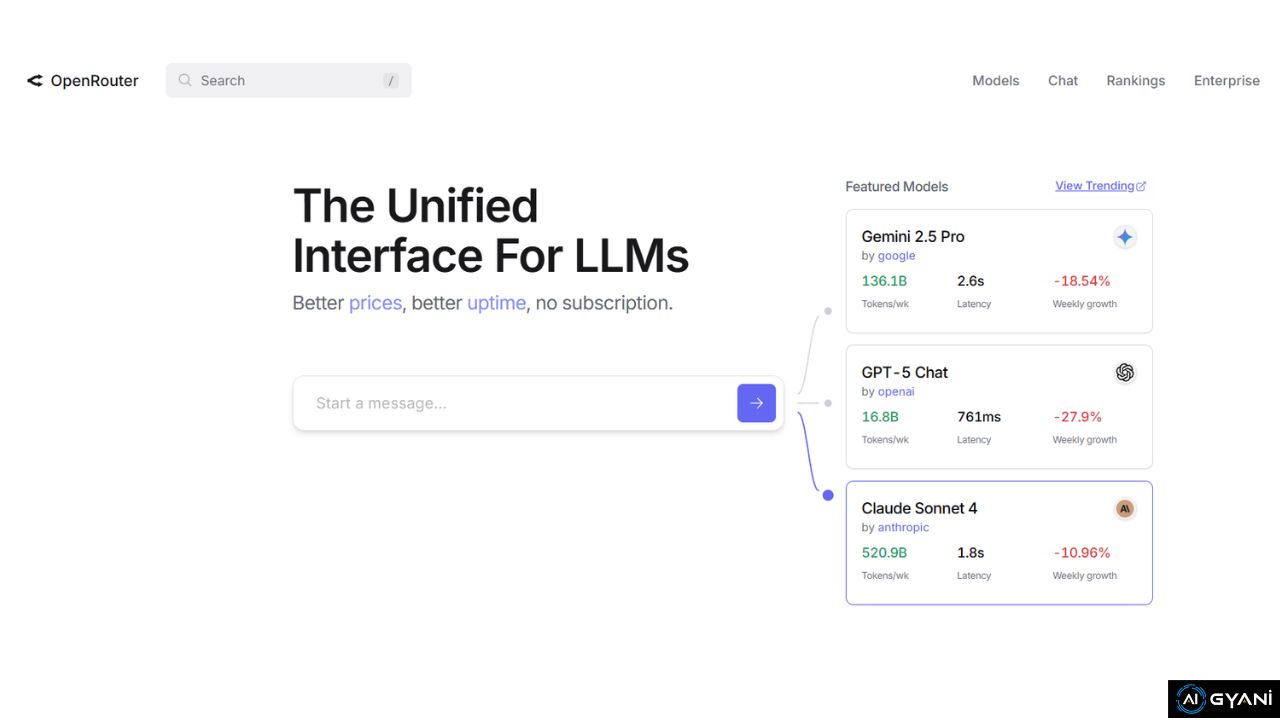

With the rapid rise of AI models from providers like OpenAI, Anthropic, Google, and more, developers often face a challenge: managing multiple APIs, varying costs, and uptime reliability.

OpenRouter addresses this with a single, unified API for all major LLMs. It combines competitive pricing, higher availability, and simplified access, with no need for separate subscriptions.

OpenRouter AI Overview

| Field | Details |
| --- | --- |
| Tool Name | OpenRouter |
| Category | AI Infrastructure / LLM API Gateway |
| Key Features | Unified API for multiple models, OpenAI-compatible, 400+ models, higher availability, fallback routing, custom data policies, transparent pricing |
| Official Website | openrouter.ai |

What is OpenRouter?

OpenRouter is essentially the hub for all major AI models, allowing developers to access 400+ LLMs from 60+ providers with just one API key. Instead of signing up and paying separately for each model (e.g., GPT-5, Claude Sonnet, Gemini 2.5), developers can buy credits on OpenRouter and spend them across different providers seamlessly.
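To make the "one API key" idea concrete, here is a minimal sketch of a request against OpenRouter's OpenAI-compatible chat completions endpoint, using only the Python standard library. The model slugs in the usage note and the `OPENROUTER_API_KEY` environment variable name are assumptions for illustration; check the model list on openrouter.ai for current identifiers.

```python
import json
import urllib.request

# OpenRouter's OpenAI-compatible chat completions endpoint.
OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_chat_request(model: str, prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a chat completion request for the given model."""
    payload = {
        "model": model,  # provider-prefixed slug, e.g. "openai/..." or "anthropic/..."
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(model: str, prompt: str, api_key: str) -> str:
    """Send the request and return the first reply (needs network access and credits)."""
    with urllib.request.urlopen(build_chat_request(model, prompt, api_key)) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Calling `ask("openai/gpt-4o-mini", "Hello", key)` and `ask("anthropic/claude-3.5-sonnet", "Hello", key)` with the same key would reach two different providers; only the model string changes.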

Key Features

- One API for Any Model
  - Connects you to models from OpenAI, Anthropic, Google, Mistral, and more.
  - Fully OpenAI-compatible, so existing codebases integrate easily.
- Higher Availability
  - Distributed infrastructure ensures reliability.
  - Automatic fallback to other providers when one goes down.
- Transparent Pricing & Performance
  - Keep costs predictable while maintaining speed.
  - Runs at the edge, adding only ~25ms between users and inference.
- Custom Data Policies
  - Fine-grained controls ensure prompts only go to trusted providers.
  - Enterprise-level compliance and security.
- Ecosystem of Models & Rankings
  - Explore leaderboards and usage stats across models.
  - Benchmark performance, accuracy, and adoption rates.
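The fallback behaviour described above happens on OpenRouter's side, but the idea is easy to illustrate. The sketch below is a client-side approximation, not OpenRouter's actual routing logic: it tries a list of models in order and returns the first successful reply. The `send` callable and the model names in the usage note are hypothetical stand-ins for whatever client function you use.

```python
from typing import Callable, Sequence


class AllModelsFailed(RuntimeError):
    """Raised when every candidate model returned an error."""


def complete_with_fallback(
    prompt: str,
    models: Sequence[str],
    send: Callable[[str, str], str],
) -> tuple[str, str]:
    """Try each model in order; return (model_used, reply) from the first success."""
    errors: list[str] = []
    for model in models:
        try:
            return model, send(model, prompt)
        except Exception as exc:  # a real client would catch narrower error types
            errors.append(f"{model}: {exc}")
    # Every candidate failed, so surface all collected errors at once.
    raise AllModelsFailed("; ".join(errors))
```

With `models=["provider-a/model-x", "provider-b/model-y"]`, a failure on the first entry moves on to the second, and the caller learns which model actually answered.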

Pros and Cons

✅ Pros

- One API key for 400+ models (huge convenience).

- No subscription model — pay-as-you-go credits.

- Automatic fallback increases uptime reliability.

- Transparent, competitive pricing.

- OpenAI SDK compatibility for easier integration.

❌ Cons

- Requires buying credits in advance (no postpaid billing).

- Some developers may prefer direct contracts with providers.

- Edge latency (~25ms) might matter in ultra-low-latency applications.
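Whether the ~25ms overhead matters depends on how long the model itself takes to respond. A rough way to reason about it, assuming a few hypothetical inference times:

```python
GATEWAY_OVERHEAD_MS = 25.0  # the ~25 ms figure quoted in this review


def overhead_share(inference_ms: float, overhead_ms: float = GATEWAY_OVERHEAD_MS) -> float:
    """Fraction of total response time spent on the gateway hop."""
    return overhead_ms / (inference_ms + overhead_ms)


for inference_ms in (50, 500, 2000):  # hypothetical model response times
    print(f"{inference_ms:>5} ms inference -> {overhead_share(inference_ms):.1%} overhead")
```

Under these assumed numbers the hop accounts for about a third of a 50 ms response, but only around 5% of a 500 ms response and about 1% of a 2-second one, which is why it mainly affects ultra-low-latency workloads.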

Use Cases

- Developers & Startups: Quickly experiment with multiple models without vendor lock-in.

- Enterprises: Ensure uptime by routing workloads to the best available provider.

- Researchers: Compare model accuracy, speed, and cost side-by-side.

- App Builders: Use one API to support multiple LLMs in user-facing applications.

Pricing

OpenRouter operates on a credit system:

- Buy credits once and use them across any model or provider.
- Example pricing shown:
  - $10 = ~30M tokens
  - $99 = ~300M tokens
- Prices vary depending on the specific model (e.g., GPT-5 vs Claude vs Gemini).
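The two example bundles above imply a blended rate that is easy to work out. The sketch below only does the arithmetic on those quoted figures; the monthly usage number is a hypothetical workload, and real per-model prices will differ.

```python
def usd_per_million_tokens(price_usd: float, tokens_millions: float) -> float:
    """Effective price per one million tokens for a credit bundle."""
    return price_usd / tokens_millions


def estimate_cost_usd(tokens: int, rate_per_million: float) -> float:
    """Estimated spend for a token count at a given per-million rate."""
    return tokens / 1_000_000 * rate_per_million


small = usd_per_million_tokens(10, 30)    # $10 for ~30M tokens
large = usd_per_million_tokens(99, 300)   # $99 for ~300M tokens
monthly = estimate_cost_usd(60_000_000, large)  # hypothetical 60M tokens per month

print(f"small bundle: ${small:.3f}/M, large bundle: ${large:.3f}/M")
print(f"60M tokens at the large-bundle rate: ${monthly:.2f}")
```

Both bundles come out at roughly $0.33 per million tokens, so the example pricing is close to linear rather than offering a large volume discount.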

This flexibility means you don’t need multiple subscriptions — just a single pool of credits.

OpenRouter AI Review

OpenRouter is an excellent solution for developers and organizations who want to avoid vendor lock-in, reduce downtime, and optimize AI costs.

It offers one of the most developer-friendly ways to integrate multiple LLMs into apps, experiments, or enterprise systems.

While some may prefer going directly to OpenAI or Anthropic for guaranteed SLAs, OpenRouter’s unified access, reliability, and competitive pricing make it a strong alternative — especially for teams juggling multiple models.