Debugging AI agents has always been one of the most frustrating challenges for engineering teams. Failures get buried in long logs, individual bugs hide among noisy traces, and recurring issues often go unnoticed until users complain.

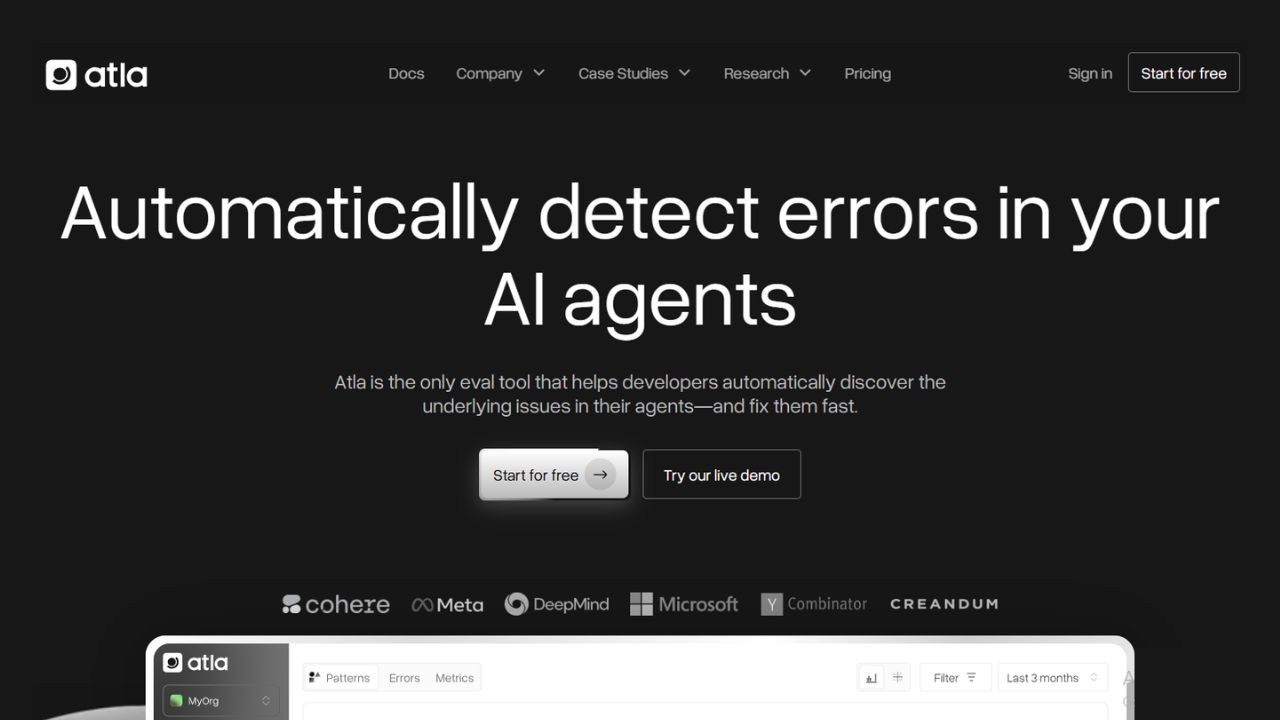

That’s why we built Atla AI—the first evaluation tool designed to automatically detect underlying issues in your AI agents, surface failure patterns, and help you fix them before they reach users.

The Problem with Debugging AI Agents

Most teams know the pain:

- Failures are hidden in massive logs.

- Time is wasted manually sifting through traces.

- Recurring patterns get lost in the noise.

- Monitoring tools catch individual bugs but miss systemic issues.

As a result, engineers spend hours (or even weeks) firefighting issues, while users end up facing unreliable AI experiences.

The Atla AI Solution

Atla AI automatically detects failures at the step level and clusters them into recurring patterns. Instead of reacting to an endless stream of bug reports, you can prioritize and quickly fix what matters most.
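To make the idea of step-level clustering more concrete, here is a minimal Python sketch of the general technique. It is not Atla's implementation or API. The `StepFailure` record, its fields, the category labels, and the `rank_failure_patterns` function are all hypothetical. The sketch assumes each failure has already been annotated with the step where it occurred and an error category. It groups failures by that (step, category) pair and ranks the groups by how many distinct traces they affect.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class StepFailure:
    """One annotated failure at a single agent step (hypothetical schema)."""
    trace_id: str   # which agent run the failure came from
    step: str       # e.g. the tool or sub-task where the failure occurred
    category: str   # an error label such as "wrong_tool_args"


def rank_failure_patterns(
    failures: list[StepFailure],
) -> list[tuple[tuple[str, str], int]]:
    """Group failures by (step, category) and rank groups by distinct traces affected."""
    traces_per_pattern: dict[tuple[str, str], set[str]] = {}
    for f in failures:
        # A set de-duplicates repeated failures within the same trace.
        traces_per_pattern.setdefault((f.step, f.category), set()).add(f.trace_id)
    counts = Counter({pattern: len(ids) for pattern, ids in traces_per_pattern.items()})
    # most_common() sorts patterns from most to least widespread.
    return counts.most_common()


if __name__ == "__main__":
    failures = [
        StepFailure("t1", "search_tool", "wrong_tool_args"),
        StepFailure("t2", "search_tool", "wrong_tool_args"),
        StepFailure("t2", "search_tool", "wrong_tool_args"),  # same trace, counted once
        StepFailure("t3", "final_answer", "hallucinated_fact"),
    ]
    print(rank_failure_patterns(failures))
    # [(('search_tool', 'wrong_tool_args'), 2), (('final_answer', 'hallucinated_fact'), 1)]
```

Counting distinct traces rather than raw failure events keeps a single misbehaving run from inflating a pattern's apparent impact. A production system would likely cluster on richer signals than two exact-match labels.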

With Atla, you can:

🧩 Detect Failure Patterns

Uncover high-impact recurring failures so you know where to focus engineering time.

🔍 Pinpoint Root Causes

Get step-level error annotations that explain why failures happen.

🕵️ Chat with Your Traces

Interact with traces in plain language—ask questions, surface patterns, and validate suspicions with data.

🛠 Generate Fixes

Receive targeted, actionable recommendations specific enough to turn into small pull requests.

⚡ Integrate with Coding Agents

Send fixes directly to coding agents like Claude Code or Cursor so they can implement them on autopilot.

🧪 Test & Validate

See exactly how prompt edits, model swaps, or code changes affect performance.

▶️ Run Simulations

Replay failing steps right inside the UI to confirm that your fixes actually work.

🎙 Go Multimodal

Extend error detection beyond text—Atla works with voice agents and more.

Why Atla Matters

Instead of chasing failures one by one, teams can now detect, prioritize, and fix systemic problems in hours—not weeks. Agent-first companies in industries like legal, sales, and productivity are already using Atla to save engineering time and ship more reliable AI experiences.

Atla isn’t just another monitoring tool—it’s a complete evaluation and debugging layer for AI agents at scale.

Final Thoughts

AI agents are only as good as their reliability. With Atla AI, you no longer have to wait for users to find bugs. You can automatically detect errors, understand failure patterns, and fix issues proactively—making your agents faster, smarter, and more dependable.

🚀 Atla is here to redefine AI reliability.