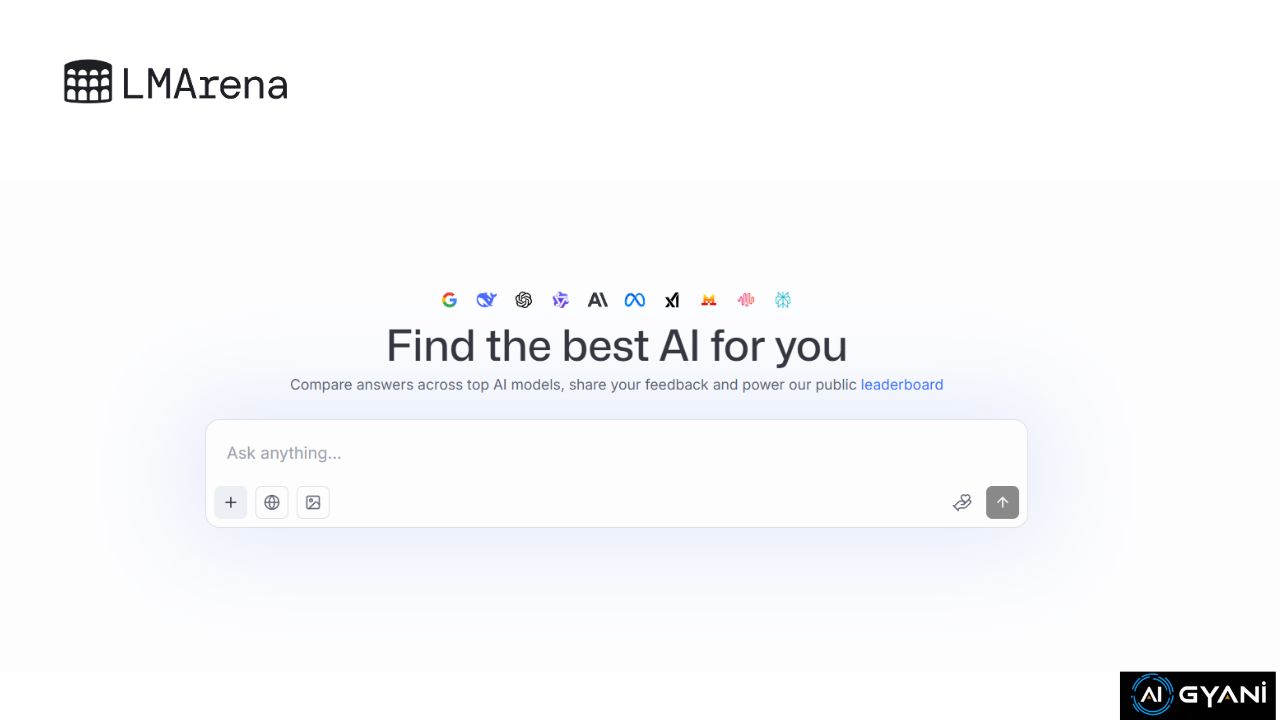

LMArena is a platform for comparing and benchmarking AI models. Users submit a prompt, see how different large language models respond to it, and “battle” the models against each other to find out which one performs best for that prompt. (Source: website)

It also maintains a leaderboard of top-performing models based on community feedback.

How LMArena Works / Features

- Model Comparison / Prompt Battles

Users can enter a prompt, then see how multiple AI models (from providers such as Anthropic, Meta, and Perplexity) respond side by side. This gives a direct comparison of quality, style, and correctness.

- Leaderboard & Community Feedback

The platform has a public leaderboard where users vote on or rank model responses. Models that perform well on popular prompts get higher visibility.

- Account & Chat History Syncing

If you create an account, your chat history is saved across devices.

- Transparency Warnings

The site discloses that inputs are processed via third-party AI and that responses may not always be accurate. It also warns users not to share sensitive personal information, because some content may be shared publicly to support the community.
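To make the leaderboard idea more concrete, here is a minimal sketch of how side-by-side votes could be turned into a ranking. It uses a simple Elo-style update as an illustration and is not a description of LMArena's actual scoring method. The model names, the vote format, and the `k` and `start` parameters are all hypothetical.

```python
from collections import defaultdict


def expected_score(rating_a: float, rating_b: float) -> float:
    """Elo-style estimate of the probability that A is preferred over B."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def build_leaderboard(votes, k: float = 32.0, start: float = 1000.0):
    """Turn pairwise votes into a ranking.

    votes: iterable of (model_a, model_b, winner), winner in {"a", "b", "tie"}.
    This vote format is an assumption made for illustration only.
    """
    ratings = defaultdict(lambda: start)
    outcome = {"a": 1.0, "b": 0.0, "tie": 0.5}
    for model_a, model_b, winner in votes:
        score_a = outcome[winner]
        exp_a = expected_score(ratings[model_a], ratings[model_b])
        delta = k * (score_a - exp_a)
        # Zero-sum update: what one model gains, the other loses.
        ratings[model_a] += delta
        ratings[model_b] -= delta
    # Highest rating first.
    return sorted(ratings.items(), key=lambda kv: kv[1], reverse=True)


# Hypothetical battles between placeholder models.
votes = [
    ("model-x", "model-y", "a"),
    ("model-y", "model-z", "a"),
    ("model-x", "model-z", "a"),
    ("model-x", "model-y", "tie"),
]
leaderboard = build_leaderboard(votes)
for rank, (name, rating) in enumerate(leaderboard, start=1):
    print(f"{rank}. {name}: {rating:.0f}")
```

In a sketch like this, a model climbs by winning battles, and wins against higher-rated opponents count for more than wins against lower-rated ones.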

Pros & Cons of LMArena

✅ Pros

- Allows side-by-side comparison of AI model outputs, helping users see strengths and weaknesses.

- Community feedback / leaderboard provides social proof and comparative ranking.

- Saves chat history (with account) for reference across sessions.

- Useful for prompt engineers, AI enthusiasts, or people evaluating model performance.

❌ Cons / Things to Watch

- Model answers may be inconsistent or incorrect; comparing them side by side doesn’t guarantee that any of them is correct.

- Privacy is a concern: the platform states that user inputs and conversations “may be disclosed publicly or to relevant AI providers.”

- It depends on third-party models, so the latency, availability, or cost of those models may affect the experience.

- If a model isn’t integrated, you can’t test it.

Use Cases & Ideal Audience

- Prompt engineers who want to test and refine prompts across multiple models.

- AI researchers / developers assessing model performance on specific tasks.

- Curious users who want to see how different AI models respond to the same query.

- Educators / trainers demonstrating model behavior differences in classrooms or workshops.