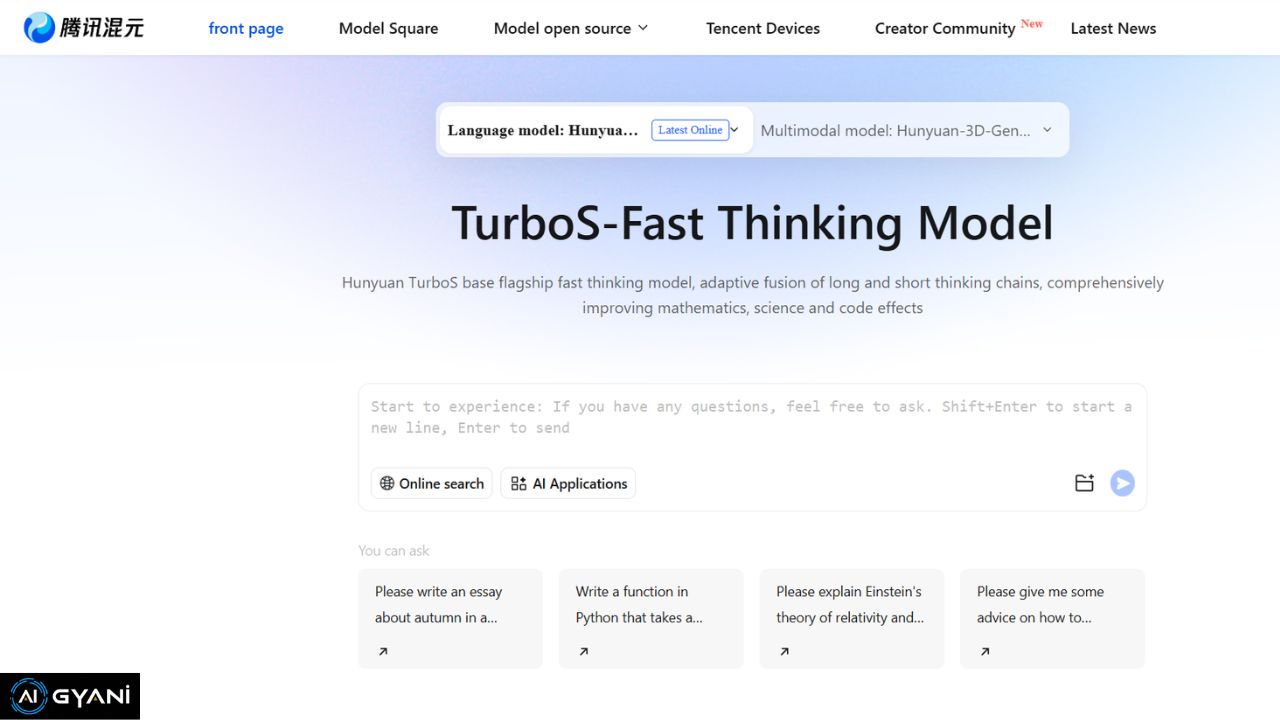

Tencent has officially released Hunyuan-A13B, a new open-source Mixture-of-Experts (MoE) model and a notable step toward more efficient large language models.

With 80 billion total parameters but only 13 billion active for any given token, the model embodies a “smaller, faster, better” philosophy: strong performance with far less computation per token than a dense model of the same total size.
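To show roughly what “13 billion active” means for compute, a common rule of thumb puts a transformer’s forward pass at about 2 FLOPs per active parameter per generated token. The sketch below applies that approximation to the article’s figures. It ignores attention over the context, router overhead, and hardware efficiency, so the numbers are illustrative rather than measured, and the “dense 80B” comparison is hypothetical.

```python
# Back-of-the-envelope compute comparison using the common
# "~2 FLOPs per active parameter per generated token" approximation.
# Ignores attention over the context, router overhead, and hardware
# efficiency, so this is an illustration, not a benchmark.

TOTAL_PARAMS = 80e9   # all experts plus shared weights
ACTIVE_PARAMS = 13e9  # parameters actually used per token


def forward_flops_per_token(active_params: float) -> float:
    """Approximate forward-pass FLOPs for one generated token."""
    return 2.0 * active_params


moe_flops = forward_flops_per_token(ACTIVE_PARAMS)
dense_flops = forward_flops_per_token(TOTAL_PARAMS)  # hypothetical dense 80B model

print(f"MoE (13B active): {moe_flops / 1e9:.0f} GFLOPs/token")
print(f"Dense 80B:        {dense_flops / 1e9:.0f} GFLOPs/token")
print(f"Fraction of dense compute: {moe_flops / dense_flops:.0%}")
```

Under this approximation the MoE model needs about 13/80, or roughly 16%, of the per-token compute of a dense model with the same total parameter count.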

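In an MoE design, only a fraction of the parameters (a few “expert” sub-networks chosen by a router) are activated for each token, which cuts compute while, by Tencent’s account, preserving quality. The result? Fast responses, efficient resource usage, and smooth deployment, even on mid-range GPUs, although all 80 billion parameters still need to be held in memory.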
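The routing idea can be sketched in a few lines. In a typical MoE layer, a small router scores every expert for each token, keeps only the top-k, and mixes their outputs using softmax gate weights. The toy below uses hypothetical sizes (8 experts, top-2, 4-dimensional vectors) and plain Python; it is a generic illustration of top-k routing, not Hunyuan-A13B’s actual expert layout, routing scheme, or code.

```python
import math
import random

# Toy Mixture-of-Experts layer: the router scores every expert for a token,
# but only the top-k experts are actually evaluated. All sizes are
# hypothetical and tiny; this is NOT Hunyuan-A13B's implementation.

NUM_EXPERTS = 8
TOP_K = 2
DIM = 4


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(seed):
    """Each 'expert' is a tiny linear map (a DIM x DIM matrix)."""
    rng = random.Random(seed)
    return [[rng.uniform(-1, 1) for _ in range(DIM)] for _ in range(DIM)]


def apply_expert(weights, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


experts = [make_expert(i) for i in range(NUM_EXPERTS)]
router_rng = random.Random(42)
router = [[router_rng.uniform(-1, 1) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]


def moe_forward(x):
    """Route one token vector x through its top-k experts only."""
    logits = [sum(r * xi for r, xi in zip(row, x)) for row in router]
    top = sorted(range(NUM_EXPERTS), key=lambda i: logits[i], reverse=True)[:TOP_K]
    # Renormalise gate weights over the selected experts only.
    gates = softmax([logits[i] for i in top])
    out = [0.0] * DIM
    for gate, idx in zip(gates, top):
        y = apply_expert(experts[idx], x)  # only these k experts do any work
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, top


token = [0.5, -1.0, 0.25, 2.0]
output, used = moe_forward(token)
print(f"experts used: {used} of {NUM_EXPERTS}")
print("output:", [round(v, 3) for v in output])
```

Because the unselected experts are never evaluated, per-token compute tracks the active parameters, while memory still has to hold every expert.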

Designed for Depth, Not Just Speed

Hunyuan-A13B isn’t just efficient; it’s also adaptive. Whether handling a prompt like “Give me tips to stay focused while working remotely” or a complex travel-planning comparison, the model tends to add structure, logic, and insight of its own, often going beyond what was asked. Built-in capabilities include:

- Dynamic reasoning & logical analysis

- Tool integration via natural language

- Support for long-context reasoning (up to 128K tokens)

It also maintains stable performance on long inputs, such as policy documents or analytical essays, without losing coherence.
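A long context window also has a memory cost at inference time: the key-value (KV) cache grows linearly with the number of tokens. The sketch below uses the standard formula for attention with grouped key/value heads. The layer count, KV-head count, and head dimension are hypothetical placeholders, not Hunyuan-A13B’s published configuration; substitute the real values from the model’s config file before drawing conclusions.

```python
# KV-cache size: keys and values are stored for every layer, every KV head,
# and every token in the context.
#   bytes = 2 (K and V) * layers * kv_heads * head_dim * seq_len * bytes_per_elem
# The config values below are HYPOTHETICAL placeholders, not the real model's.

NUM_LAYERS = 32      # hypothetical
NUM_KV_HEADS = 8     # hypothetical (grouped-query attention)
HEAD_DIM = 128       # hypothetical
BYTES_PER_ELEM = 2   # bf16/fp16 cache


def kv_cache_bytes(seq_len: int, batch_size: int = 1) -> int:
    """Total KV-cache bytes for `batch_size` sequences of `seq_len` tokens."""
    return 2 * NUM_LAYERS * NUM_KV_HEADS * HEAD_DIM * seq_len * BYTES_PER_ELEM * batch_size


for tokens in (8 * 1024, 32 * 1024, 128 * 1024):
    gib = kv_cache_bytes(tokens) / 1024**3
    print(f"{tokens:>7} tokens -> {gib:5.2f} GiB of KV cache")
```

With these placeholder values, a single 128K-token sequence would need 16 GiB of cache on top of the model weights; the real figure depends entirely on the model’s actual configuration.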

Benchmark Performance That Speaks Volumes

Tencent reports that Hunyuan-A13B delivers 2.2x–2.5x the throughput of similar open-source models at the same input and output lengths. Tencent also went further, launching two new benchmarks alongside the model:

- ArtifactsBench – Evaluates code generation in visual/interactive contexts

- C3-Bench – Tests agentic reasoning and vulnerability handling

Tencent presents these benchmarks as evidence that Hunyuan-A13B isn’t just smart on paper but handles agentic, real-world tasks such as tool use, conversation, and reasoning exceptionally well.

Open, Accessible, and Built for Developers

A major strength of Hunyuan-A13B is its accessibility. Tencent says it is optimized to run on affordable consumer hardware, and it is tailored for:

- Independent developers

- SMEs seeking AI solutions

- Research groups focused on scalability

The model is available now on GitHub, Hugging Face, and via Tencent Cloud.
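Because every expert must be resident in memory, the storage needed for the weights scales with the 80 billion total parameters, not the 13 billion active ones. The sketch below gives rough weight-only estimates at common precisions. It assumes the weights are loaded in those formats (whether a given quantized variant is available depends on the release and serving stack) and ignores the KV cache, activations, and runtime overhead.

```python
# Rough weight-memory estimate: every expert must be held in memory, so
# storage scales with TOTAL parameters (80B), not active ones (13B).
# Excludes KV cache, activations, and framework overhead.

TOTAL_PARAMS = 80e9

BYTES_PER_PARAM = {
    "bf16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(params: float, bytes_per_param: float) -> float:
    """Weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


for fmt, nbytes in BYTES_PER_PARAM.items():
    print(f"{fmt:>5}: ~{weight_memory_gb(TOTAL_PARAMS, nbytes):.0f} GB of weights")
```

That works out to about 160 GB in bf16, 80 GB at 8-bit, and 40 GB at 4-bit, so quantization, multi-GPU setups, or offloading are worth keeping in mind when reading claims about affordable hardware.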

Why This Matters: Shaping the AI Future

Tencent’s release raises the bar for open-source AI in three ways:

- Efficiency over brute force: Outperforms some larger models while using fewer resources.

- Collaborative development: Tencent is enabling a shared ecosystem, offering both models and evaluation tools.

- Economic inclusion: With low hardware demands, AI development becomes viable even for small-scale teams and startups.

By lowering entry barriers and pushing innovation on several fronts, Hunyuan-A13B may be the most practical open-source AI model yet. The future isn’t just bigger; it’s smarter, faster, and more open.

How to Use Hunyuan-A13B

Try it out:

🔗 hunyuan.tencent.com

🔗 GitHub Repo

🔗 C3-Bench

🔗 ArtifactsBench
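For developers who want to call the model programmatically, one common pattern is to serve open weights behind an OpenAI-compatible chat-completions endpoint (vLLM, for example, provides one) and send requests over HTTP. The sketch below uses only the Python standard library. The endpoint URL and model name are assumptions for illustration; check the GitHub repo and the Hugging Face model card for the officially supported serving options and model IDs.

```python
import json
import urllib.request

# ASSUMPTIONS (not from the article): the model is served locally behind an
# OpenAI-compatible chat-completions endpoint (e.g. via vLLM) at this
# hypothetical URL, under this assumed model name.
ENDPOINT = "http://localhost:8000/v1/chat/completions"
MODEL_NAME = "tencent/Hunyuan-A13B-Instruct"


def build_chat_request(prompt: str, max_tokens: int = 512) -> urllib.request.Request:
    """Build (but do not send) a chat-completions POST request."""
    payload = {
        "model": MODEL_NAME,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the request and return the assistant's reply text."""
    req = build_chat_request(prompt)
    with urllib.request.urlopen(req, timeout=120) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return body["choices"][0]["message"]["content"]
```

With a server running, `print(ask("Give me tips to stay focused while working remotely."))` sends the article’s example prompt. Keeping request construction separate from sending makes the payload easy to inspect or test without a live server.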